We are often asked by customers and prospects: what is the best cloud data warehouse technology for handling analytics workloads? The reality is that every cloud data warehouse has its own competitive pricing and discount model, performance characteristics that depend on your workload type, scalability options and security mechanisms. Cloud providers have come a long way and are now considered among the safest and most convenient ways to store any amount and type of information.

After all, the basic techniques for making a fast columnar data warehouse have been well known since the C-Store paper was published in 2005. There are many publicly available benchmarks comparing these cloud data platforms, and most are conducted using a variation of the industry-standard TPC-DS specification. The cluster configuration, the selection and modification of queries, data sizes, data partitioning, data compression techniques and tuning optimizations are all factors that can be challenged when evaluating the results.

So which cloud warehouse is right for you?

The similarities between these powerful data warehousing systems are greater than their differences if you are considering running your analytics workload in the cloud. Having said that, here are a few important nuanced distinctions:

BigQuery:

- Charges for data storage, for streaming inserts, and for queries based on the bytes scanned per query. Loading and exporting data, however, is free of charge. Also offers cost control mechanisms and a long-term pricing model.

- Capable of processing queries across Google Cloud Storage, Bigtable, or spreadsheets in Google Drive.

- Google undergoes independent verification of security, privacy and compliance controls achieving certifications against global standards.

- Serverless, petabyte-scale data warehouse that provides resources when needed. “Set and Forget” model with no need to size or operate the computing resources.

- BigQuery integrates with a smaller ecosystem, including Cloud Dataproc and Cloud Dataflow.
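
To make the bytes-scanned pricing model concrete, here is a minimal sketch of how a per-query charge could be estimated. The function name, the TiB billing unit and the rate used in the example are illustrative assumptions, not published prices; check the provider's current price list before relying on any figure.

```python
from decimal import Decimal

BYTES_PER_TIB = 2 ** 40  # assumed billing unit for this sketch


def scan_cost(bytes_scanned: int, price_per_tib: Decimal) -> Decimal:
    """Estimate the charge for one query billed purely on bytes scanned."""
    return Decimal(bytes_scanned) / BYTES_PER_TIB * price_per_tib


# Hypothetical rate chosen only to make the arithmetic easy to follow.
EXAMPLE_RATE = Decimal("5.00")

# A query that scans 1.5 TiB at the hypothetical rate.
example_cost = scan_cost(3 * BYTES_PER_TIB // 2, EXAMPLE_RATE)
print(f"Estimated query cost: {example_cost}")
```

Because the charge scales with the data read rather than with the time the query runs, anything that reduces the bytes scanned (partition pruning, selecting fewer columns, pre-aggregated tables) lowers the bill directly.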

Learn How AtScale Improves Efficiency on BigQuery

Redshift:

- On-demand and reserved-instance pricing, billed per node per hour, which covers both compute power and data storage. Amazon Redshift Spectrum pricing is additional and is based on the bytes scanned.

- Spectrum can run complex queries on data stored in S3 as-is, enabling the scaling of the compute and storage independently.

- Amazon has an extensive integrated compliance program in addition to the database security capabilities.

- Minimal administration required: create a cluster, select an instance type and manage scaling.

- Amazon Redshift integrates with a variety of AWS services and AWS has the largest cloud ecosystem of capabilities.
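
The two-part pricing described above (node-hours for the cluster, plus bytes scanned for Spectrum) can be sketched as a simple sum. All names and rates below are hypothetical placeholders, and the TB billing unit is an assumption made for illustration.

```python
from decimal import Decimal

BYTES_PER_TB = 10 ** 12  # assumed billing unit for the scan-based add-on


def redshift_estimate(
    nodes: int,
    hours: int,
    node_hour_rate: Decimal,
    spectrum_bytes: int = 0,
    spectrum_rate_per_tb: Decimal = Decimal("0"),
) -> Decimal:
    """Cluster charge (per node, per hour) plus an optional scan-based charge."""
    cluster = Decimal(nodes) * Decimal(hours) * node_hour_rate
    spectrum = Decimal(spectrum_bytes) / BYTES_PER_TB * spectrum_rate_per_tb
    return cluster + spectrum


# Hypothetical month: 4 nodes running 720 hours, plus 2 TB scanned in S3.
monthly = redshift_estimate(
    nodes=4,
    hours=720,
    node_hour_rate=Decimal("0.25"),       # placeholder rate
    spectrum_bytes=2 * BYTES_PER_TB,
    spectrum_rate_per_tb=Decimal("5"),    # placeholder rate
)
print(f"Estimated monthly cost: {monthly}")
```

Note that the cluster term accrues whether or not queries are running, while the Spectrum term only grows with the data actually scanned.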

Learn How AtScale Improves Efficiency on Redshift

Snowflake:

- A minimum of one minute’s worth of Snowflake credits is consumed when a virtual warehouse is started. Virtual warehouses are charged on a per-second basis thereafter. Data storage and compute are charged separately, at rates that depend on the tier and the cloud provider.

- Account-to-account data sharing is enabled through database tables, secure views and secure UDFs.

- Deployed on secured and compliant AWS or Microsoft Azure platforms.

- True Software-as-a-Service integrated with data storage, query processing and cloud services. “Set and Forget” model.

- Wide ecosystem of 3rd-party partners and technologies.
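
The one-minute minimum followed by per-second billing is easy to misread, so here is a small sketch of the rule as described above. The `credits_per_hour` parameter is a hypothetical input (the actual rate depends on warehouse size), and rounding partial seconds up is an assumption of this sketch.

```python
import math

MIN_BILLED_SECONDS = 60  # from the rule above: one minute minimum per start


def billed_seconds(run_seconds: float) -> int:
    """Seconds billed for one warehouse start: at least 60, then per second."""
    return max(MIN_BILLED_SECONDS, math.ceil(run_seconds))


def credits_used(run_seconds: float, credits_per_hour: float) -> float:
    """Credits consumed by one warehouse run at a given hourly credit rate."""
    return billed_seconds(run_seconds) * credits_per_hour / 3600


# A 20-second run is billed as a full minute; a 90-second run is billed as 90 seconds.
for seconds in (20, 90):
    print(seconds, billed_seconds(seconds), credits_used(seconds, credits_per_hour=1.0))
```

The practical consequence is that many very short, separately started runs each pay the one-minute minimum, so workloads made of frequent tiny queries benefit from keeping a warehouse running or from batching.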

Learn How AtScale Improves Efficiency on Snowflake

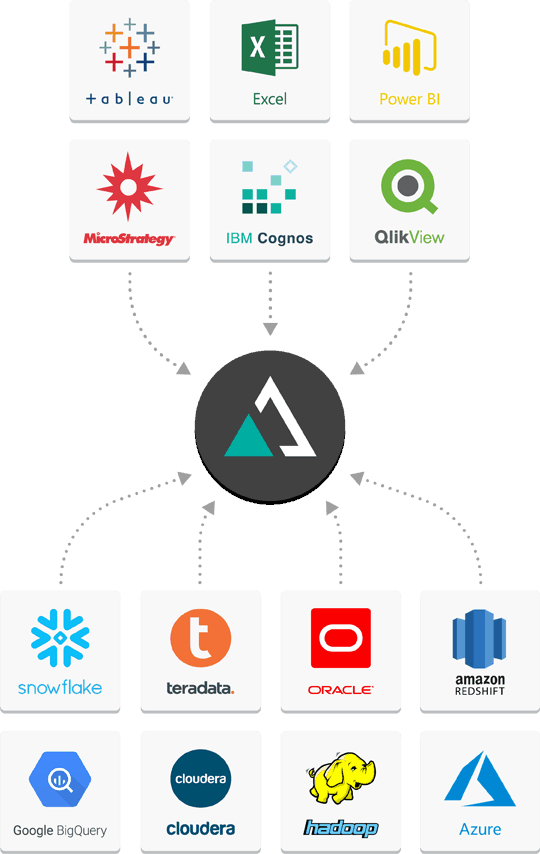

All of these cloud warehouses can store vast amounts of data. However, storing data in one place isn’t enough to achieve the vision of a data-driven organization. To benefit from a shared data asset, most organizations need to provide a wide range of interfaces, including both SQL and MDX (Multidimensional Expressions) modes, so that users can easily consume their data.

That’s where AtScale comes in

Delivering interactive performance via multiple business interfaces while managing cost remains a challenge, even in today’s new cloud data warehouses.

The AtScale Intelligent Data Virtualization

AtScale’s Intelligent Data Virtualization is the only product on the market today that natively supports both SQL and MDX live query processing, capitalizing on the reporting tools the enterprise has already invested in, including Microsoft Excel. Data modelers can design virtual cubes using familiar workflows and intuitive drag-and-drop interactions to create hierarchies, measures, sophisticated calculations and virtual data enrichment.

Perhaps one of the most important uses of intelligent data virtualization is that it isolates business users from the enterprise's digital modernization efforts. The data can be moved to a new data platform without disrupting the business or compromising its ability to deliver critical insights.

Move beyond data extracts, lengthy cube-building processes and complex data pipelines. Instead, give your users a business-friendly interface without turning them into data engineers, while providing proper governance and data lineage through a live connection to your data, with no data movement.

And performance?

AtScale greatly improves performance and delivers faster time to insight by building and maintaining intelligent aggregate tables, using advanced machine-learning algorithms that optimize for observed query patterns and deliver the performance users expect. These aggregate-aware tables eliminate scripting, tedious query performance tuning and redundant summary tables while providing full visibility and control.

Avoiding costly access to the raw data increases throughput, concurrency and performance while significantly reducing cost.
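
As a rough illustration of what "aggregate aware" means, the sketch below routes a query to the smallest pre-aggregated table that still contains every dimension the query needs, and falls back to the raw table otherwise. This is a simplified, hypothetical model and not AtScale's actual algorithm: the `Table` class, `pick_table` function and table names are invented for the example, and it assumes all measures can be re-aggregated safely (for example sums and counts).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Table:
    name: str
    dimensions: frozenset  # dimensions the table is grouped by (or contains)
    row_count: int


def pick_table(query_dims, raw, aggregates):
    """Return the smallest aggregate covering all query dimensions, else raw."""
    needed = set(query_dims)
    candidates = [t for t in aggregates if needed <= t.dimensions]
    return min(candidates, key=lambda t: t.row_count, default=raw)


# Hypothetical raw table and two aggregates built from it.
raw = Table("sales_raw", frozenset({"date", "store", "product", "customer"}), 1_000_000_000)
aggregates = [
    Table("agg_date_store", frozenset({"date", "store"}), 500_000),
    Table("agg_date", frozenset({"date"}), 3_650),
]

print(pick_table({"date"}, raw, aggregates).name)           # served by agg_date
print(pick_table({"date", "store"}, raw, aggregates).name)  # served by agg_date_store
print(pick_table({"customer"}, raw, aggregates).name)       # falls back to sales_raw
```

Routing a query to a table with thousands of rows instead of billions is what reduces both the bytes scanned and the compute time, which is why the benefit applies regardless of the warehouse's pricing model.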

Cost control

Irrespective of the cloud warehouse of your choice, delivering interactive performance while managing cost remains a challenge.

AtScale’s unique aggregate-aware feature will drastically reduce compute and storage costs independent of the pricing model while leveraging your Business Intelligence investments.
