April 15, 2020

When Everything You Do Becomes Data, OLAP is More Important than Ever

*Author’s Note: This is an update to the blog post “The 1990’s Called, They Want Their OLAP Back,” originally published in June 2020.*

For the modern cloud-first data pro, the terms “OLAP” and “cube” are dirty words. That said, many large enterprise data teams still have mission-critical analytics based on legacy OLAP technologies. I founded AtScale with the goal of creating a modern, more elegant approach to accelerating analytics, one that leverages the power of the underlying cloud data platforms instead of extracting data into a separate cluster. Over the years, I have talked to many teams struggling to break the chains of entrenched OLAP-based applications. The most common questions we get from seasoned OLAP veterans include:

- How do you handle data explosion?

- How do you handle Big Data?

- How long does it take to process the cube?

- How long does it take to make changes, like adding a dimension?

- Do we have to move data?

- How long? How long? How long?

Clearly, these OLAP admins and power users had been living with some pain. To add insult to injury, OLAP solutions are based on the premise of “speed of thought” response times. But during an era of exponential data growth, traditional OLAP solutions get bogged down with large data sets. Data users lowered their expectations from “Can we get it under 15 seconds?” to “Can I get it by lunch?” This is why I founded AtScale. The world needed a cloud-first approach to high-performance analytics – some refer to this as cloud OLAP.

H2: A Brief History of SSAS and OLAP

In 1992, Arbor Software shipped the first version of Essbase, which stands for Extended Spreadsheet Database.

In 1998, Microsoft shipped Microsoft SQL Server Analysis Services, bringing multi-dimensional databases into the mainstream. Almost 30 years after Essbase’s debut, these OLAP engines are very much still in play. If you want to geek out, learn more about Dr. Edgar F. Codd, who coined the term OLAP. (Hint: It doesn’t stand for OLD Analytical Processing!)

Databases up to this point were essentially two-dimensional: made up of columns and rows. They also required a techy query language (SQL) to retrieve data. With the onset of OLAP, business users could ask dimensional questions of the data and get “speed of thought” answers, all without learning a query language. They could simply go into Excel and drill down, pivot, and swap dimensions and measures. Instead of needing to parse through general records, fields, and facts, any user could look at more relevant breakdowns, such as “Sales by Region,” “Sales by Product,” “Sales by Channel,” or “Sales by Market,” or they could filter by a time period. These “by’s” made OLAP a powerful, multidimensional resource for businesses at the time. The ability to just drag and drop, drill down, and drill up (all within Excel, with the click of a mouse instead of writing SQL) was game-changing. It’s why OLAP became the rage of the 1990s and, in large part, still is today.
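Under the covers, each of those “by” questions is an aggregation grouped by one dimension, equivalent to SQL such as `SELECT region, SUM(sales) FROM facts GROUP BY region`. The minimal Python sketch below uses a small, hypothetical fact table (the rows and the `sales_by` function are invented for illustration) to show that swapping “by Region” for “by Product” only changes the grouping key. An OLAP tool generated these aggregations for the user, so nobody had to write them by hand.

```python
from collections import defaultdict

# Hypothetical fact rows: (region, product, channel, sales amount)
FACTS = [
    ("East", "Widget", "Online", 120.0),
    ("East", "Gadget", "Retail", 80.0),
    ("West", "Widget", "Retail", 200.0),
    ("West", "Gadget", "Online", 50.0),
]

# Position of each dimension within a fact row
DIMENSIONS = {"region": 0, "product": 1, "channel": 2}


def sales_by(dimension: str) -> dict[str, float]:
    """Sum the sales measure grouped by one dimension, like SQL GROUP BY."""
    idx = DIMENSIONS[dimension]
    totals: dict[str, float] = defaultdict(float)
    for row in FACTS:
        totals[row[idx]] += row[3]
    return dict(totals)


if __name__ == "__main__":
    print(sales_by("region"))   # Sales by Region
    print(sales_by("product"))  # Sales by Product
    print(sales_by("channel"))  # Sales by Channel
```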

Theoretically, these OLAP engines had no dimensional limit. But in the real world, it quickly became evident that moving data off-source, high-cardinality dimensions, intensive calculations, and sheer data size all degraded performance. In the early days, models approaching ten to thirteen dimensions began to see slower queries. Changing the “cube,” updating the data, and calculating data took hours, if not days. These challenges only grew more acute over time. And this was even before the introduction of true Big Data!
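One way to see why dimension count hurts: a fully pre-aggregated cube keeps a summary for every combination of dimensions. The sketch below counts those combinations (often called cuboids), assuming each dimension can be grouped at any one of its hierarchy levels or rolled up to “All.” It counts aggregate views only, not cells; actual storage also depends on each dimension’s cardinality and how sparse the data is.

```python
from math import prod


def cuboid_count(levels_per_dimension: list[int]) -> int:
    """Number of distinct aggregate views (cuboids) in a fully materialized cube.

    Each dimension can appear at any one of its hierarchy levels or be
    rolled up to "All", giving (levels + 1) choices per dimension.
    """
    return prod(levels + 1 for levels in levels_per_dimension)


if __name__ == "__main__":
    for n in (5, 10, 13):
        flat = cuboid_count([1] * n)  # single-level dimensions: 2**n views
        deep = cuboid_count([3] * n)  # e.g. Year > Quarter > Month on every dimension: 4**n views
        print(f"{n:>2} dims: {flat:>6} flat, {deep:>12} with 3-level hierarchies")
```

With single-level dimensions the count is 2^n, so ten dimensions already imply 1,024 aggregate views and thirteen imply 8,192. Giving every dimension a three-level hierarchy raises that to 4^n.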

H2: Challenges of OLAP & Cloud Data Platforms

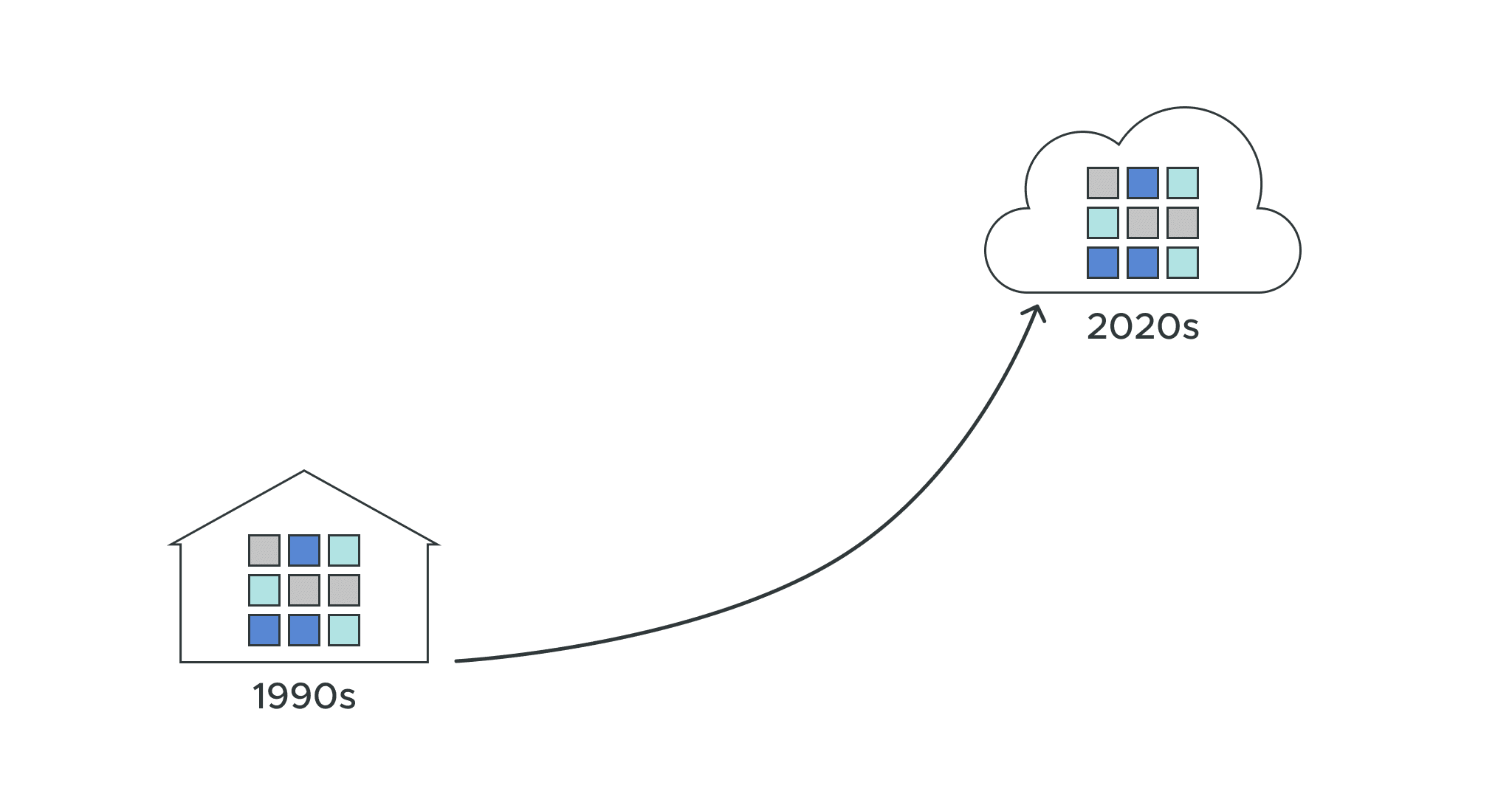

As we move into the 2020s, Big Data and cloud databases like Snowflake, Databricks, and Google BigQuery are becoming the standard. But OLAP cubes are still widely in use and are literally “exploding” from the size of the data. For example, I ran a 24-terabyte SQL Server Analysis Services cube at Yahoo. It took seven straight days of non-stop computation to build the cube, then several weeks to make any type of change. What’s more, enterprises are moving their data to the cloud, and that migration raises expectations of instantaneous access to lots of data.

H2: Towards a Brave New OLAP: Cloud OLAP!

Here at AtScale, we needed to answer a big question about OLAP: How do we handle large amounts of data, models with large numbers of dimensions and measures, and the absolute need for interactive query performance?

We needed to create something different from last century’s OLAP. Today’s businesses need cloud OLAP. We began to ask, “How do we take advantage of the new cloud data platforms that have virtually unlimited scale? How do we keep the things that worked in the original OLAP solutions but ditch what didn’t? And how would we make OLAP scale with today’s data?”

H3: OLAP Modernization in an Era of Big Data

I’ve seen many attempts to reboot OLAP over the last few years. Most have failed or, worse, created even more complicated environments. To see what I mean by a “complicated environment,” all one needs to do is look at the current cloud analytics ecosystem. It’s enough to make one wish for the 1990s big hair metal bands to come back.

Some companies try to deal with their large-scale cloud data ecosystems by pre-aggregating data (making it small), moving it off-platform, relying on many different technologies, and/or extracting it into various reporting tools. This gets complicated quickly. On top of this, businesses that handle their data this way are likely in violation of corporate governance policies, and they then have the pleasure of fighting over whose numbers are right. The result is as bad as an outdated OLAP solution, or worse, and even more complicated. Many vendors are pushing this approach: move data, index data, and calculate all intersections. It’s the same old OLAP issues with a bigger problem: massive volumes of data.

H3: AtScale’s Approach to Cloud OLAP

So how does AtScale solve this problem? We use an approach called Query Virtualization. We leave the data where it landed and leverage the cloud data platforms’ scale, coupled with intelligent aggregates and a multi-dimensional interface, to deliver a modern OLAP experience. To the end user of Tableau, Power BI, or Excel, we look like the good old big hair bands we all knew and loved back in the early 1990s, but without all the limitations. This is because we aren’t storing and pre-aggregating every single data cell. Users can still see their business by Time, by Region, by Market, etc. They can still drill down, swap, and pivot. Most importantly, they get that performance without sacrificing scale.

AtScale’s universal semantic layer is a thin, virtualized layer that sits on top of your data platform. It intercepts inbound SQL or Multidimensional Expressions (MDX) queries from various BI tools and translates them into SQL that runs on the cloud data platform, against the data where it landed. There is no data movement. Instead, AtScale creates acceleration structures stored on the cloud data platform, providing an order of magnitude better query performance. We leverage the distributed compute of these platforms and store only smart slices of data, informed by user query behavior. These acceleration structures are stored right next to the raw data.
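The idea of answering queries from acceleration structures that sit next to the raw data can be illustrated with a minimal routing sketch. It is not AtScale’s implementation: the table names, row counts, and the `route` function are hypothetical. It assumes additive measures such as SUM or COUNT, which can be rolled up from a finer aggregate (a distinct count, for example, cannot), and treats the query’s dimensions as both its grouped and its filtered columns.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Aggregate:
    """A hypothetical pre-computed table stored next to the raw data."""
    table: str
    dimensions: frozenset[str]
    row_count: int


# Hypothetical acceleration structures; names and sizes are illustrative only.
AGGREGATES = [
    Aggregate("agg_region_month", frozenset({"region", "month"}), 2_400),
    Aggregate("agg_region_product_month", frozenset({"region", "product", "month"}), 480_000),
]
RAW_FACT_TABLE = "sales_fact"  # hypothetical raw fact table


def route(query_dimensions: set[str]) -> str:
    """Pick the smallest aggregate that contains every dimension the query uses.

    Any aggregate holding a superset of the requested dimensions can answer the
    query by rolling up additive measures. If none qualifies, the query runs
    against the raw fact table instead.
    """
    candidates = [a for a in AGGREGATES if query_dimensions <= a.dimensions]
    if not candidates:
        return RAW_FACT_TABLE
    return min(candidates, key=lambda a: a.row_count).table


if __name__ == "__main__":
    print(route({"region"}))            # served by the smaller aggregate
    print(route({"product", "month"}))  # needs the finer aggregate
    print(route({"customer"}))          # no aggregate covers it: raw data
```

Choosing the smallest covering aggregate keeps the scan cheap, while the fallback to the fact table means every query can still be answered, accelerated or not.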

This means a couple of things. First, you gain a single source of truth through the Universal Semantic Layer (meaning no more fighting over whose numbers are correct!). This approach to OLAP also ensures data governance because there are no additional copies of data. Plus, data access rules are applied to every query in real time, ensuring consistent governance. Additionally, because of AtScale’s virtualization architecture, you no longer have the restrictions of yesteryear. I’ve seen models with thousands of dimensions and measures. You won’t need to buy bigger servers in order to, as Ricky Bobby says, “go fast.” You don’t scale up; you scale across, again leveraging the power of the underlying cloud data platforms. And if you decide, as many of our customers do, to move from an on-premises Hadoop cluster or traditional data warehouse to a cloud data platform, it’s a simple plumbing redirect, not a months-long project with painful end-user disruptions.
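Applying access rules on every query can be sketched as query generation that injects a row filter before aggregation. This is a hypothetical illustration, not AtScale’s mechanism: `ACCESS_RULES`, `MODEL_DIMENSIONS`, `build_query`, and the `sales_fact` table are invented for the example, and Python’s built-in `sqlite3` stands in for a cloud data platform.

```python
import sqlite3

# Hypothetical access policy: regions each user is allowed to see.
ACCESS_RULES = {"alice": ["East"], "bob": ["East", "West"]}
# Only dimensions defined in the (hypothetical) model may be grouped on.
MODEL_DIMENSIONS = {"region", "product"}


def build_query(user: str, group_by: list[str]) -> tuple[str, list[str]]:
    """Generate SQL for SUM(sales) by the given dimensions, with the user's row filter.

    The filter is applied to fact rows before aggregation, so every total reflects
    only data the user may see. Region values are passed as bind parameters
    rather than spliced into the SQL text.
    """
    if not group_by or not set(group_by) <= MODEL_DIMENSIONS:
        raise ValueError(f"unknown dimensions: {group_by}")
    cols = ", ".join(group_by)
    regions = ACCESS_RULES.get(user, [])
    if regions:
        where = "WHERE region IN (" + ", ".join("?" for _ in regions) + ")"
    else:
        where = "WHERE 1 = 0"  # no rule means no rows
    sql = f"SELECT {cols}, SUM(sales) FROM sales_fact {where} GROUP BY {cols} ORDER BY {cols}"
    return sql, regions


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE sales_fact (region TEXT, product TEXT, sales REAL)")
    db.executemany(
        "INSERT INTO sales_fact VALUES (?, ?, ?)",
        [("East", "Widget", 120.0), ("East", "Gadget", 80.0), ("West", "Widget", 200.0)],
    )
    for user in ("alice", "bob"):
        sql, params = build_query(user, ["product"])
        print(user, db.execute(sql, params).fetchall())
```

Because the filter sits in the WHERE clause, alice’s sales by product are computed only from East rows, while bob’s include both regions.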

It’s like the best music from the early 1990s minus the big hair. Cloud OLAP is a masterpiece movie from the past, digitally remastered to take complete advantage of modern capabilities.

Want to learn more about our semantic layer — the key to a modern cloud OLAP? Check out a case study with Betclic, one of AtScale’s customers who used a semantic layer to modernize their outdated OLAP for cloud analytics.
