How analysts consume data is a key factor in determining how quickly, accurately, and efficiently stakeholders can make data-driven decisions. Without the ability to freely and speedily share data insights, it can take a lot of time, effort, and expertise to come up with better-informed business strategies.

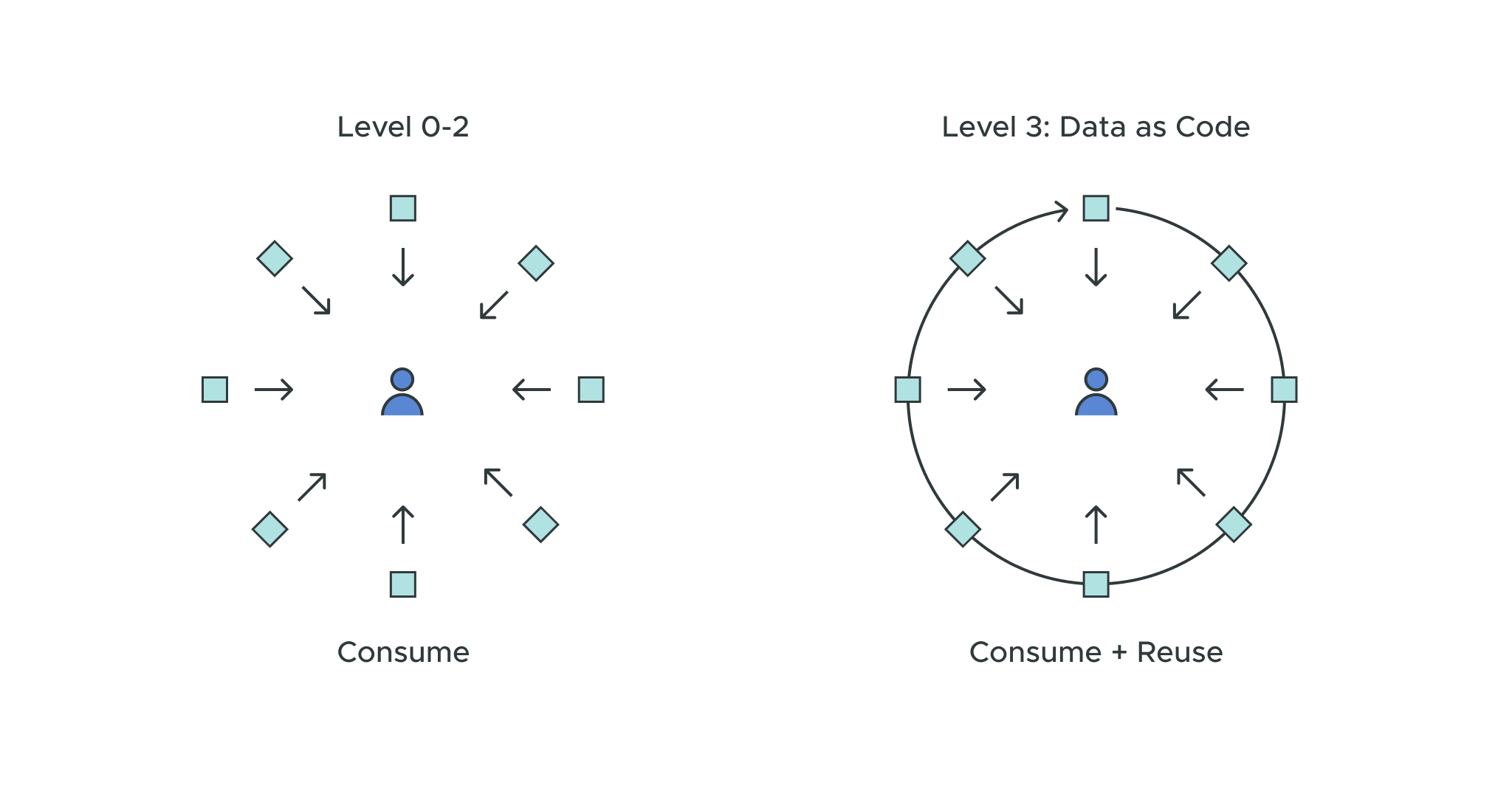

Data-mature organizations ingrain data consumption into the very fabric of their daily operations. How? By deploying “data as code.” That means data analysis is baked into the entire enterprise – allowing for a continuous cycle of collection, aggregation, and analytics.

In Module 8 of AtScale’s Data & Analytics Maturity Workshop, we’ll discuss the four methods of data consumption as they relate to each maturity model:

- Level 0: Initial – Query

- Level 1: Procedural – Dashboards

- Level 2: Proactive – Self-service Analytics

- Level 3: Leading – Data as Code

We’ll run through how each of these consume capabilities plays out, the pros and cons of each, and reveal how using a semantic layer can unlock the benefits of deploying “data as code” throughout the entire enterprise.

Level 0: The Initial Phase of Data and Analytics Maturity / Query

Level 0 requires a high level of expertise. The query capability is the main (if not only) method of consuming data in which data analysts manually query the data with data management languages like SQL and MDX.

Querying is one of the oldest methods of data consumption. It requires data analysts to not only have a deep understanding of their enterprise’s data models but also to know how to create, update, search, and destroy new tables, models, and data points by using these programming languages.

It’s also immensely difficult to share data pulled from queries. To bypass programming skill roadblocks, some organizations may grant access to SQL worksheets to share their data across roles and departments.

This might seem like an easy fix, but using SQL worksheets to share data is dangerous. When someone is given an SQL worksheet, they then have access to the SQL code used to manage their enterprise’s data. That grants them the ability to modify or change information in the database, which could lead to bad data.

These factors all make querying as a method of data consumption very risky, time-consuming, and hard to implement.

Level 1: The Procedural Level of Data and Analytics Maturity / Dashboards

At the procedural level, data consumption experiences some amount of progress. Rather than solely relying on queries to consume data, organizations expand their consume capabilities by introducing a graphical layer – or dashboards – to represent data. Popular tools like Tableau and Power BI are great examples of data dashboards.

The benefit of dashboards is that once they’re set up, users aren’t required to have any knowledge of data query languages. Plus, dashboards don’t require any skill to access them or interpret their data – only a predetermined impression of KPIs. This makes the data in dashboards a lot more accessible and sharable. However, creating the dashboard requires data analysts to not only have knowledge of query languages but also have knowledge of how to use the tool.

By nature, dashboards are always backward-looking. They’re retrospective snapshots of data that might already be irrelevant. That’s why organizations stuck at Level 1 inevitably need to progress to less static methods to enhance their data-driven insights.

Level 2: The Proactive Stage of Data and Analytics Maturity / Self-service Analytics

Graduating from the procedural to the proactive level, organizations become all about self-service data analytics.

At Level 2, data analysis is no longer retroactive. Here, organizations start using ad hoc analysis to explore their data. Self-service analytics is capable of unveiling forward-looking decisions and is very flexible.

This level finally introduces the power of the semantic layer, which provides context for all data – new, old, or modified – throughout the enterprise. It also improves data quality, analysis, and accessibility. Users are no longer required to be experts with their data model nor create their own calculations, programs, or code to consume data. With that in mind, it’s safe to say that Level 2 is a healthy place for organizations to be when it comes to enhancing data-driven decisions.

Level 3: Data and Analytics Leaders / Data as Code

If Level 2 is a healthy goal, why should organizations aim to move on to Level 3, the leading model? Here’s the simple fact: Level 3 opens up exciting opportunities for organizations to go above and beyond with data consumption. At this level, data is not only openly accessible – it is also reusable.

Organizations at Level 3 are ready to make big choices, create industry-leading changes, and stay ahead of the competition.

This is where organizations can start to leverage “data as code.”

What is Data as Code?

“Data as code” allows teams of any size, focus, or department to process, manage, share, and consume data continuously throughout the data cycle. It treats data the same way that developers treat code during software development – with an eye for continuous integration and deployment.

With that level of flexibility, data is:

- Embedded into models and the semantic layer

- Accessible from anywhere, including APIs, query tools, and even SQL commands

- An active part of your stakeholders’ decision-making process

The Unmatched Power of Deploying Data as Code

Deploying “data as code” moves beyond the proactive approach. By using “data as code,” all end users can be empowered to take control of their data, increasing collaboration and accelerating iterations. This opens up a whole new world for DataOps.

With “data as code,” analytics are continuously deployed throughout the enterprise. That continuous deployment unlocks continuous and autonomous consumption. Data never goes stale because it’s always being shared, updated, and accessed. So, how can organizations tap into the power of “data as code?”

Best Practice: Integrate Data as Code with a Semantic Layer

Semantic layers unlock the context that organizations need to deploy “data as code.” And together, “data as code” and semantic layers empower data consumers. With users of all skill sets and experiences able to freely access, understand, and consume data, data-driven decision-making is more efficient and collaborative than ever.

Achieving Level 3 in the data maturity model might seem like an almost-impossible task. But with the power of AtScale’s semantic layer, deploying “data as code” can become a likely reality.

Watch the full video module for this topic as part of our Data & Analytics Maturity Workshop Series.

SHARE

Case Study: Vodafone Portugal Modernizes Data Analytics