September 26, 2024

AtScale Unveils Natural Language Query Capabilities, Revolutionizing Data Access for Business Users

Generative AI, or GenAI, is a powerful new technology capable of answering real business questions without requiring the user to consult an analyst or review a BI report. This capability, called Natural Language Querying or NLQ for short, has historically struggled in a few key areas, but combining this technology with the Semantic Layer and Query Engine of AtScale has shown to provide an elegant solution to these problems. With this technology combined, AtScale has been able to match or exceed the performance seen in industry-leading NLQ products, all without exposing any underlying data to the LLM.

Traditional Issues Facing NLQ Products

In the current market, NLQ products are built on the back of what is known as a Large Language Model, or LLM. At a glance, an LLM is built to understand the relationship between words. Think of a supercharged version of your phone’s autocomplete functionality. For autocomplete, given the previous set of words and the history of the messages you typically send, it can provide suggestions for the next few words. This is more or less what an LLM does but on a vastly larger scale. Tools like ChatGPT and Microsoft Copilot are popular examples of LLMs.

There are several uses for LLMs, but the NLQ use case has become increasingly viable in recent months. This is partly because LLMs are getting more powerful but also because research has found that a Semantic Layer may address two of the key issues historically seen in NLQ solutions. These issues have been a persistent thorn for LLMs and can be described as:

- Mapping a business term to a warehouse column or specific calculation

- Understanding how to build the SQL joins required by the query

Both of these issues come down to the LLM needing additional context to answer a question. To better understand this issue, say you asked an NLQ product a question like “What were the previous week’s units sold in the state of New York?” Answering this question would require a few key pieces of information.

First, how does your company define the previous week’s units sold? While this might seem like a straightforward calculation, customer A might define this metric as the sales over the previous 7 days, while customer B might define this as the sales over the last full Sunday to Saturday period. There are several ways to define this metric, but without the context of how your specific business defines it, an LLM will rely on its best guess on how to define it. This can lead to inconsistencies in reporting to the end users.

Second, what column in the company’s data warehouse should the filter for the state of New York be applied to? While a user may think of this entity as ‘state’, the warehouse column in the database may be named ‘st_name’, ‘full_name_state’, or any other name, depending on the engineer who made the table. Without additional context, the LLM will again have to rely on its training data to make its best guess at what your company is named in this column.

Finally, say that your company stores sales information in a separate table from the location information, as in most modern data warehouse designs. In this case, the LLM must now try to deal with joins and other multi-table operations. These operations require information about columns that would not even be mentioned in the question, meaning an LLM would have almost no chance of successfully writing this query without more context.

Luckily, all of these difficulties can be alleviated by coupling the LLM with AtScale.

Introducing the AtScale Semantic Layer

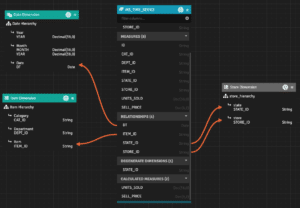

The AtScale Semantic Layer provides an intuitive environment for a business to define all of its unique KPIs and the relationships between those KPIs. AtScale’s Universal Semantic Layer has been developed over the last decade to support the work of BI users, but it also provides a treasure trove of context that can be leveraged by an LLM.

Similarly to how the structure of the Semantic Layer is provided to BI tools like Tableau and PowerBI, we can provide it to the LLM as additional context in our NLQ product. This means that when asked the same question, “What was the previous week’s units sold in the state of New York?” the LLM will be able to pull in the customer-specific definition of the previous week’s units sold, know what column in the warehouse “state” refers to, and could in theory create the SQL code necessary to join our two tables. This is just a theory, as this join issue is actually completely removed by leveraging AtScale’s Query Engine!

Introducing the AtScale Query Engine

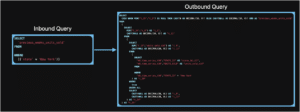

AtScale’s Query Engine is another existing resource that can be leveraged to benefit NLQ tasks. Traditionally, the Query Engine enabled AtScale users to connect to their Semantic Model from any BI tool and data warehouse and gave them the flexibility to switch anytime. To accomplish this, queries submitted against a Semantic Layer are done as an Inbound Query. Inbound Queries are submitted as PostgreSQL, where the queries all reference a single logical table where all objects in the Semantic Layer are represented as columns. Once an Inbound Query is submitted, AtScale will use the definitions of the KPIs and joins defined in the Semantic Model to translate the query into Outbound SQL, which is then executed against the underlying Data Warehouse. In practice, this translation vastly simplifies the queries needed to answer complex BI questions.

Interestingly, this same technology can also be applied to our NLQ use case. By tasking the LLM to create the Inbound Queries and leveraging this same workflow, we can vastly simplify the problem the LLM is trying to solve. Since Inbound Queries are made against a single logic table, we can completely remove the need for the LLM to do joins or any multi-table operations. In addition, since the AtScale Query Engine will handle the translation between logical column name and KPI, the LLM will never have to generate complex business logic. This simplifies the question, transforming even the most complex NLQ question into something almost trivial for the LLM.

By combining the simplification benefits of the Query Engine with the additional contextual information of the Semantic Layer, AtScale enables NLQ products to perform at market-leading levels.

Where Next

Look for a deeper dive into the AtScale NLQ integration in our upcoming whitepaper. There, we will provide greater technical detail on how our NLQ product was built and how it excels against other market-leading NLQ products. Even more excitingly, keep an eye on the AtScale socials as we begin showing off our NLQ product later this summer!

How Does Power BI / Direct Lake Perform & Scale on Microsoft Fabric