The growing popularity of big data analytics coupled with the adoption of technologies like Spark and Hadoop have allowed enterprises to collect an ever increasing amount of data – in terms of breadth and volume. At the same time, the need for traditional business analysis of these data sets using widely adopted tools like Microsoft Excel, Tableau, and Qlik still remains. Historically data is provided to these visualization front ends using OLAP interfaces and data structures. OLAP makes the data easy for business users to consume, and offers interactive performance for the types of queries that the business intelligence (BI) tools generate.

However, as data volumes explode, reaching hundreds of terabytes or even petabytes of data, traditional OLAP servers have a hard time scaling. To surmount this modern data challenge, many leading enterprises are now in search of the next generation of business intelligence capabilities, falling into the category of scale-out BI. In this blog I’ll share how you can leverage the familiar interface and performance of an OLAP server while scaling out to the largest of data sets.

Why SSAS?

Microsoft SQL Server Analysis Services, or SSAS, is one of the most broadly adopted business intelligence platforms in the world and is by far the market-leading multi-dimensional OLAP (MOLAP) Server. While exact market share statistics are hard to come by, it’s likely that SSAS is installed at almost every Fortune 1000 company. In this article we’ll take a look at the characteristics of SSAS that created this market success, share some of our own experiences with SSAS at larger enterprises (like Yahoo!), and also discuss the need for a new approach to scale-out multi-dimensional analysis.

A few key reasons SQL Server Analysis Services achieved prominence in the world of business intelligence:

- Native integration with Excel: As detailed in this great post, SSAS has native integration with Microsoft Excel (the most popular BI tool in the world). This means that any Excel user, with access to a SSAS cube, can slice, dice, analyze, and drill down on data to his or her heart’s content.

- Expressive MDX support: MDX (Multi-dimensional Expression Language) provides excellent support for higher level business intelligence functions, and is more efficient for these types of queries than traditional SQL.

- Rich schema representation via XMLA: In addition to supporting the MDX query language, SSAS (and other OLAP providers) can express complex OLAP models (hierarchies, measure groups, relationships, drill downs) by exposing cube metadata using something called XML for Analysis (XMLA). This allows any BI tool that connects to SSAS to execute metadata queries and represent entire cube structures (instead of flat tables) to the visualization interfaces.

- Interactive performance for BI workloads: Last, but certainly not least, SASS’ multi-dimensional OLAP approach (where every possible combination of measure and dimension values is pre-aggregated) results in excellent performance, as long as the pre-aggregated results can be effectively stored and manipulated in memory and on a single “scale-up” computer – more on this below.

Challenges with Scaling SSAS

While SSAS has a number of characteristics that make it perfect for a number of traditional business intelligence workloads, it also has some architectural challenges that become prohibitive when working with big data workloads.

First, SSAS was designed as a scale-up software application. This means that if you require more processing horsepower, you add more CPUs and/or memory to the single machine running SSAS. If you need to store more data, you scale-out your local or SAN storage to store your cubes. This approach leads to increasingly expensive hardware requirements and a bounded limit on eventual system scalability.

This scale-up approach leads to operational challenges with SSAS. When AtScale’s founders used SSAS at Yahoo and scaled it to support a 24TB cube, we eventually ran into a wall. This “wall” manifested itself in a few ways. First, the amount of time required to process the multi-dimensional SSAS cube became prohibitive. This resulted in significant delays in data availability – sometimes it would take 2 to 3 days before data was ready to be analyzed. Second, the cubes became so large that it wasn’t feasible to store all data in a single cube, and as a result we partitioned our cubes into multiple cubes, broken down by quarter. This meant that analysts had to connect to multiple cubes to perform simple business analysis use cases, such as looking at seasonal fluctuations.

The SSAS “pre-computation-of-all-possible-data” approach has some inherent challenges when it comes to big data. While the benefits of MOLAP (an easy-to-use interface, great performance for pre-aggregated data) are easily derived on smaller data sets, when large data sets with large dimensions (tens of millions of SKUs, tens of millions of sensor ids, hundreds of millions of customers) enter the equation, the resulting “dimensional explosion” of data makes the MOLAP architecture untenable.

Technically speaking, a cube is actually a formal mathematical construct, and the definition of the size of the cube is the number of indivisible facts it contains. That basically means the number of addressable rows times the number of attributes available for each row, summed over all base fact tables. So, for a cube that contains 100 million customers, 1 million product skus, 2 years of daily data, customers that may come from 1,000 different campaigns in 100 different countries – the theoretical total size of this cube might be 7,300,000,000,000,000,000,000 cells.

Now, of course in real data sets there will be customers that are limited to specific countries, customers won’t buy every product, and customers won’t purchase on every single day. But as anyone who has worked with large dimensional data sets can tell you, even on real data sets the dimensional explosion of this data in MOLAP environments essentially renders these architectures impractical.

Scaling Out, Not Up, with Hadoop

At the same time we were struggling to scale SSAS at Yahoo, we were also starting to experiment with a new (at the time) scale-out data storage and processing technology to deal with tens of billions of daily ad and clickstream events. This new technology was called Hadoop. Today, Hadoop has become one of the fastest growing data technologies and is experiencing broad adoption across industries ranging from financial services to retail to digital marketing.

At Yahoo we quickly developed an appreciation of Hadoop’s key architectural advantages. First, the Hadoop Distributed File System (HDFS) allowed us to use a cluster of commodity servers to provide low-cost, reliable and scale-out storage of our advertising data and clickstream logs. As data volumes grew, we added more servers to the cluster. If a single server failed, HDFS’ redundant storage design ensured the data was available on a different server in the cluster. Additionally, Hadoop (via distributed processing frameworks like MapReduce) provided a “shared-nothing” architecture for massively scalable and parallel processing of computing tasks, such as scanning trillions of rows of ad impressions, aggregating these impressions by campaign id, and calculating the number of views and clicks associated with each campaign.

As Hadoop became more widely adopted outside of Yahoo and developed into a more mature technology, the opportunity to tackle the traditional BI workloads handled by SSAS became a possibility. We created AtScale to make to make scale-out BI on Hadoop a reality.

A Vision for Scale-Out BI / OLAP

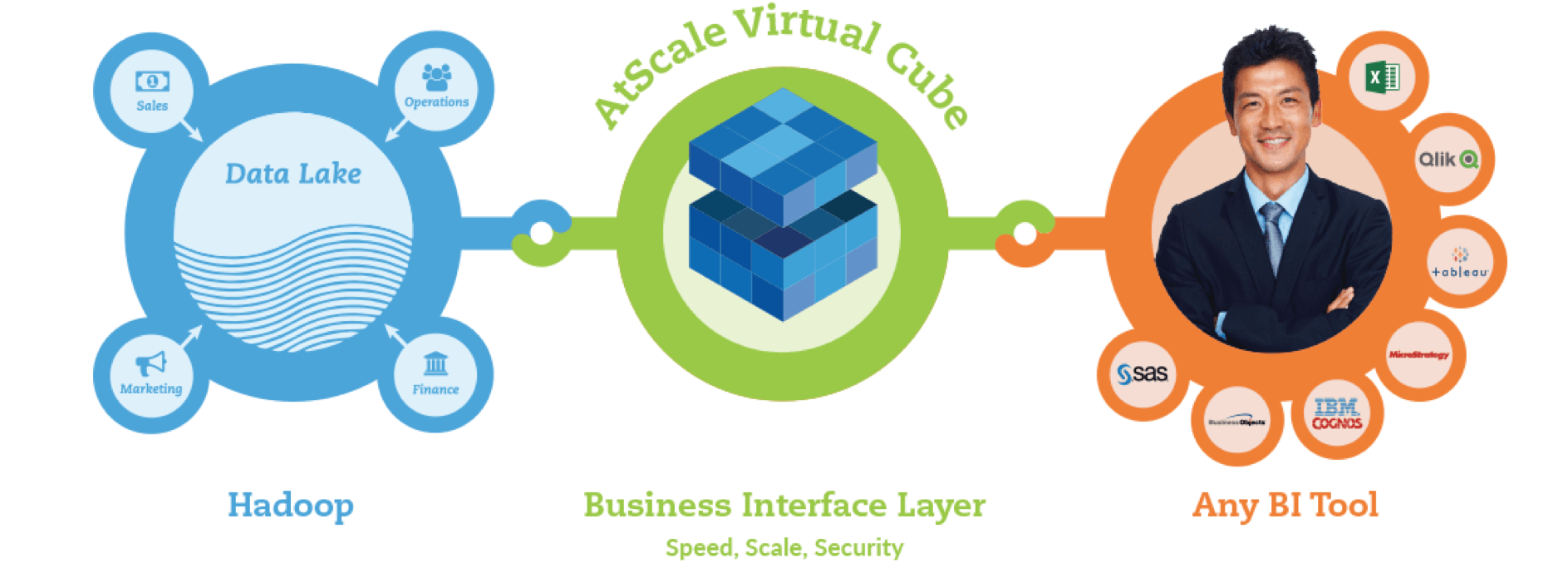

Scale-out BI architecture on Hadoop

AtScale was created with a specific vision – to deliver all the great capabilities SQL Server Analysis Services has to offer, and to do so at “big data scale”. The AtScale architecture and design was driven by several key design principles:

- Leverage Hadoop for it’s strengths. AtScale’s vision from the beginning was to deliver the scale-out storage and processing capabilities of Hadoop to support business intelligence workloads. As such, we haven’t created proprietary data structures or data processing engines that are specific to AtScale. Rather, we use Hadoop-native data formats and query engines to support AtScale-generated BI workloads.

- Play the data where it lays. It was frustrating for us at Yahoo! that we had to make our big data “small” and move it off of the Hadoop cluster and into scale-limiting cubes and data marts for it to be consumable for BI. AtScale allows BI tools to query the data directly where it sits, on the Hadoop cluster, without requiring any data movement.

- P__reserve the business-friendly interface of the cube metaphor.__ While legacy OLAP architectures may be out-dated and struggle with scale, the business concepts of measures, dimensions, and hierarchies are the way business users want to consume and express their BI data and queries. AtScale has been created to present the same cube richness as SQL Server Analysis Server, and is an XMLA-compliant data provider.

- Integrate with SQL and MDX BI tools. AtScale’s Hybrid Query Service supports both SQL and MDX query syntax. This means that business users who are familiar with using Microsoft Excel and live pivot tables against SSAS can easily use Excel to query AtScale cubes, effectively using Excel to directly query billions of rows of data on Hadoop with interactive response times. AtScale’s rich MDX support means that advanced query semantics, such as support for first & last child metric calculations.

- Preserve the performance profile of SSAS. AtScale’s patent-pending Adaptive Cache architecture is able to deliver the acceptable query response time that business users have come to expect. The AtScale Adaptive Cache ensures that business user queries can be answered interactively, directly from the Hadoop cluster. The result is a SSAS-like experience without the traditional architectural overhead related to data processing and MOLAP cube storage.

The Vision Becomes Reality

Today, AtScale is working with dozens of enterprises that have decided to move their traditional business intelligence infrastructures to a Hadoop-native, scale-out architecture. A number of these customers have specifically engaged with AtScale to solve their challenges with scaling out SQL Server Analysis Services.

Here are just a few real-life stories of scaling-out BI on Hadoop.

- A customer in the financial services space is using AtScale to support fraud & credit risk analysis on over 2 billion rows of transactional data. With a large user base of Microsoft Excel users, it was critical that AtScale be able to support direct connectivity while reducing the overhead and latency of their legacy SSAS system, which took 4 days to refresh and was struggling to support highly dimensional queries. With AtScale, the risk & fraud team benefitted from much faster “time-to-insight” through interactive virtual cube access to the credit card transaction data directly where it lives in Hadoop.

- A customer in the digital marketing space wanted to scale their shopper behavior analysis efforts to look at over 50 billion rows of data. With internal analysts using Excel and Tableau, and external reporting in Microstrategy, it was critical to have a shared semantic layer (the AtScale virtual cube) that could be shared across all data visualization front ends. With AtScale’s XMLA-compliant interface, both Microstrategy and Excel are able to connect to and query the same virtual cube using MDX. More advanced analysts can use AtScale’s tight integration with Tableau to query the same cube using SQL. The result is a shared semantic layer that delivers performance, security, and consistency for all data consumers, both internally and externally.

Today, with AtScale, it is now possible to get the rich semantic layer and query functionality of SQL Server Analysis Services along with the split-second response time that end users require on the largest of data sets. As our recent BI-on-Hadoop Benchmark study revealed, the combination of the AtScale Intelligence Platform and the scale-out nature of Hadoop means that the traditional scale limits of SSAS can be overcome. We’re excited to continue to work with enterprises that are looking to tackle this challenge!

I hope you find this interesting and helpful. Let me know what you think, comment or email me if you have questions, or visit us at atscale.com to learn more.

SHARE

Guide: How to Choose a Semantic Layer