Dimensional modeling is a technique for organizing data into facts (the metrics you measure) and dimensions (the context around those metrics), enabling faster, easier analytics.

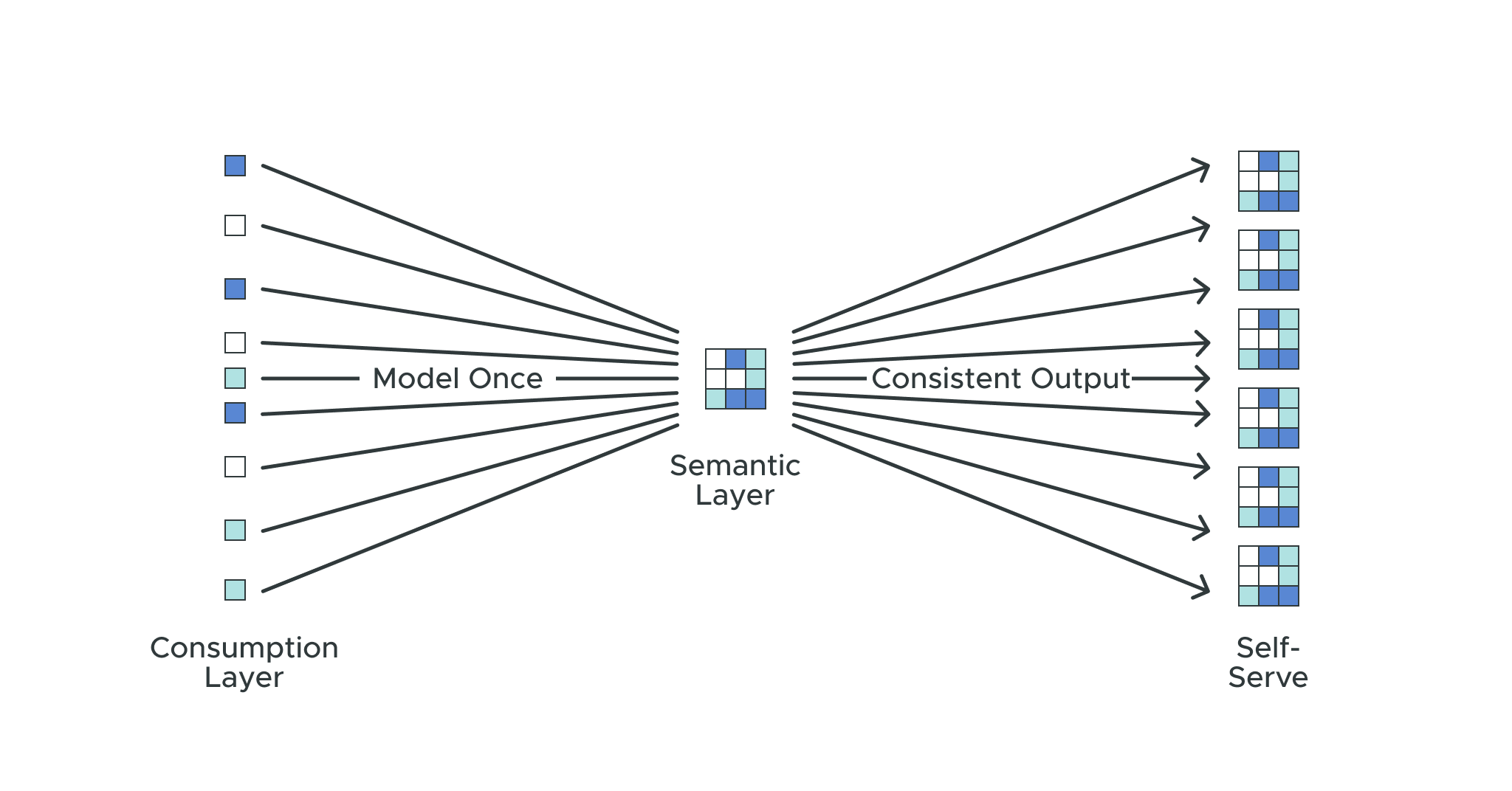

The semantic layer sits above the dimensional model and translates your business rules into consistent definitions used across every tool and platform your team relies on. Together, they give you a foundation where your data tells the same story whether someone queries it from a BI tool such as Power BI, feeds it to an AI model, or pulls it into a spreadsheet.

Most companies have an overwhelming amount of data that’s accessible but practically unusable. You’ve got analysts writing different SQL for the same metric. Engineers rebuilding the same logic in five different places. Business users waiting days for answers that should take minutes. The old approach, normalized schemas built for transactions rather than analysis, forces everyone to become a data detective just to answer basic questions.

Shifting to dimensional modeling with a semantic layer changes that equation. You define your business metrics once, model them in a way humans actually understand, and let the semantic layer handle the translation work. Your analytics leader gets consistent KPIs. Your data engineer stops rewriting the same transformations. Your modeler builds something that actually scales. And your infrastructure team finally achieves the desired level of data governance without slowing everything down.

Why Traditional Data Modeling Often Fails in Modern Environments

Legacy normalized databases are built to record transactions, and they often struggle to answer complex business questions at scale. Information ends up spread across many related tables, leading to intricate joins, fragile queries, and slow performance when analysts explore large datasets or build interactive dashboards.

For executives and analytics leaders, this results in delayed decision-making, ongoing debates over which figures are accurate, and conflicting dashboard metrics (even when they’re all sourced from the same enterprise systems). Modelers typically respond to these limitations by embedding ad hoc logic in reports or SQL queries, which only creates more inconsistencies and adds technical debt.

In practice, a normalized warehouse can cause the exact opposite of what the business expects: slower time to insight, confusing metric definitions, and a widening gap between those who understand the data model and everyone else who depends on it.

What Is Dimensional Modeling (and Why Does It Matter Now)?

Dimensional modeling organizes data into facts and gives those facts consistent context through dimensions. These models typically use star or snowflake schemas, in which fact tables sit at the center, surrounded by dimension tables that share common, conformed definitions across your organization.

The approach creates business-oriented, logical data models that map directly to physical data in your warehouse or lakehouse without moving anything. Instead of forcing analysts to navigate dozens of normalized tables, dimensional models present data in terms people already understand, such as customers, products, time periods, and regions.
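To make the star schema idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module. SQLite stands in for a real warehouse or lakehouse, and every table and column name (`fact_sales`, `dim_customer`, `dim_product`, `dim_date`, `amount`) is a hypothetical example rather than anything prescribed by a particular platform.

```python
import sqlite3

# In-memory SQLite database standing in for a warehouse (assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables: the context people filter and group by.
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, segment TEXT);
CREATE TABLE dim_product  (product_key  INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date     (date_key     INTEGER PRIMARY KEY, month TEXT);

-- Fact table at the center: one row per sale, keyed to each dimension.
CREATE TABLE fact_sales (
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    product_key  INTEGER REFERENCES dim_product(product_key),
    date_key     INTEGER REFERENCES dim_date(date_key),
    amount       REAL
);

INSERT INTO dim_customer VALUES (1, 'Enterprise'), (2, 'SMB');
INSERT INTO dim_product  VALUES (1, 'Hardware'), (2, 'Software');
INSERT INTO dim_date     VALUES (20240101, '2024-01'), (20240201, '2024-02');
INSERT INTO fact_sales VALUES
    (1, 1, 20240101, 100.0),
    (1, 2, 20240101, 250.0),
    (2, 2, 20240201, 75.0);
""")

# A typical analytical question: revenue by customer segment and month.
rows = conn.execute("""
    SELECT c.segment, d.month, SUM(f.amount) AS revenue
    FROM fact_sales f
    JOIN dim_customer c ON c.customer_key = f.customer_key
    JOIN dim_date d     ON d.date_key = f.date_key
    GROUP BY c.segment, d.month
    ORDER BY c.segment, d.month
""").fetchall()

for segment, month, revenue in rows:
    print(segment, month, revenue)
```

Every join in the query goes from the fact table directly to a dimension, which is what keeps star schema queries short and predictable compared with navigating a chain of normalized tables.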

This is especially important in cloud and AI-based environments. Dimensional models let you productize analytics, feed consistent data into machine learning, and use a semantic layer to define each business rule once instead of rebuilding it separately in every tool. Your modelers can create measures and hierarchies that your data engineers can rely on, and your analytics lead gets metrics that mean the same thing throughout the organization.

Introducing the Semantic Layer: Connecting Data and Insights

The semantic layer serves as a vital bridge between complex data sources and end-users seeking actionable insights. It accomplishes this by offering a well-defined, business-friendly layer that provides a single version of truth, simplifying data access, ensuring data consistency, and promoting data-driven decision-making.

The semantic layer harmonizes all metrics, hierarchies, and business logic once, then distributes those definitions consistently to every analytics tool, AI application, or dashboard without physically moving data. For dimensional modeling, the semantic layer acts as the productization engine. Your modeler builds measures and dimensions in the semantic layer that map directly to the underlying star schema. At the same time, your data engineer maintains confidence that calculations stay consistent across Power BI, Tableau, Python notebooks, and LLM-powered chat interfaces.

This becomes critical for AI and machine learning. LLMs and AI agents need cleanly structured and semantically rich context to accurately produce insights and answer business questions. The combination of a dimensional model with a semantic layer provides this: clear facts, transparent dimensions, and metadata that tells systems what the data actually represents in business terms.

How to Move to a Dimensional Approach Using a Semantic Layer

Start by auditing your current analytics landscape. Ask questions like which reports matter most, which metrics drive decisions, and where inconsistencies cause the biggest headaches. The results of this audit reveal the highest priority business processes and tell you where dimensional modeling will immediately provide the most benefit.

Next, determine the grain for each fact table, or the specific level of detail represented by each measurement (e.g., all individual transactions vs. daily snapshots). Build your dimension tables around the attributes used by business users to filter and group by (e.g., customer segments, product categories, time periods, geographic regions). Use conformed dimensions that apply across multiple fact tables to avoid the common problem of “metric chaos.”
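As a rough illustration of grain and conformed dimensions, the sketch below uses plain Python structures. The two fact tables, their grains, and the sample values are all made up for the example; the point is only that both facts reference the same date dimension, so their results line up on the same keys.

```python
from collections import defaultdict

# Conformed date dimension shared by both fact tables (hypothetical data).
dim_date = {
    20240101: {"month": "2024-01"},
    20240102: {"month": "2024-01"},
    20240201: {"month": "2024-02"},
}

# Grain: one row per individual sale.
fact_sales = [
    {"date_key": 20240101, "amount": 100.0},
    {"date_key": 20240102, "amount": 50.0},
    {"date_key": 20240201, "amount": 75.0},
]

# Grain: one row per support ticket.
fact_tickets = [
    {"date_key": 20240101},
    {"date_key": 20240201},
    {"date_key": 20240201},
]


def rollup_by_month(fact_rows, measure=None):
    """Sum a measure (or count rows when measure is None) per month."""
    totals = defaultdict(float)
    for row in fact_rows:
        month = dim_date[row["date_key"]]["month"]
        totals[month] += row[measure] if measure else 1
    return dict(totals)


revenue_by_month = rollup_by_month(fact_sales, "amount")
tickets_by_month = rollup_by_month(fact_tickets)

# Both results are keyed by the same month values, so they combine cleanly.
revenue_per_ticket = {
    month: revenue_by_month[month] / tickets_by_month[month]
    for month in revenue_by_month
}
print(revenue_per_ticket)
```

If each fact table carried its own private notion of "month," the final ratio would need reconciliation logic first, which is exactly where inconsistent metrics tend to creep in.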

Once your dimensional model exists in your data warehouse or lakehouse, layer the semantic layer on top. Define business metrics, hierarchies, and calculated measures in the semantic layer so they map cleanly to your facts and dimensions. This is where “revenue,” “customer lifetime value,” or “monthly active users” get their single, governed definitions.
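The idea of a single, governed definition can be sketched as a small registry that turns a metric name into SQL. This is a toy illustration, not how any specific semantic layer product is implemented; the `Metric` class, the `METRICS` and `DIMENSIONS` registries, and the table names are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    expression: str  # SQL aggregate evaluated over the fact table
    fact_table: str


# Hypothetical governed definitions: each metric is defined exactly once.
METRICS = {
    "revenue": Metric("revenue", "SUM(amount)", "fact_sales"),
    "order_count": Metric("order_count", "COUNT(*)", "fact_sales"),
}

# Dimension attribute -> (dimension table, join key). Names are illustrative.
DIMENSIONS = {
    "segment": ("dim_customer", "customer_key"),
    "month": ("dim_date", "date_key"),
}


def compile_query(metric_name: str, group_by: str) -> str:
    """Translate a metric and a grouping attribute into one SQL statement."""
    metric = METRICS[metric_name]
    dim_table, key = DIMENSIONS[group_by]
    return (
        f"SELECT d.{group_by}, {metric.expression} AS {metric.name} "
        f"FROM {metric.fact_table} f "
        f"JOIN {dim_table} d ON d.{key} = f.{key} "
        f"GROUP BY d.{group_by}"
    )


sql = compile_query("revenue", "month")
print(sql)
```

Any tool that asks for "revenue by month" through this function receives the same SQL, so a change to the definition of revenue happens in one place.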

Lastly, validate with a pilot group. Modelers and analysts who know the data inside and out can implement this incrementally, linking one BI tool or application at a time. Data engineers can keep tabs on performance while analytics teams verify that the metrics are consistent. When everyone has access to the same set of semantic definitions (as opposed to writing custom SQL), governance becomes an automatic process.

Key Criteria of a Successful Semantic Data Model

To understand why dimensional data modeling is a core capability of the semantic layer, let’s explore the essential features that a modern semantic data model should possess.

Flexible and Advanced Multi-Dimensional Modeling Framework

Multi-dimensional data is a cornerstone of effective analytics. It enables users to analyze data from various angles, providing a comprehensive view. The semantic layer should enable multi-dimensional modeling on top of cloud data without physically moving or extracting data, empowering business users to perform traditional OLAP (Online Analytical Processing) analysis on their cloud data.

Welcome All Data Personas

A modern semantic data model should cater to the diverse needs of different data personas, such as BI developers, data engineers, citizen data scientists, and BI analysts. It should provide flexibility for both code-first data modelers and those who prefer a no-code, visual approach to data modeling.

Support Composable and Versionable Semantic Objects

Taking inspiration from object-oriented programming, a modern semantic model should enable data modelers to abstract real-world business entities into composable and versionable objects. This approach promotes modularity, reuse, flexibility, and effective development, which allows metrics, dimensions, and entire models to be shared, composed, and versioned with Git integration.
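One way to picture composable, versionable semantic objects is with small immutable classes. The sketch below is an illustration under assumed names (`Measure`, `Ratio`, `order_id`, and the version strings are all hypothetical); in practice these definitions would live in files tracked in Git.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Measure:
    name: str
    sql: str
    version: str = "1.0.0"


@dataclass(frozen=True)
class Ratio:
    """A metric composed from two existing measures."""
    name: str
    numerator: Measure
    denominator: Measure
    version: str = "1.0.0"

    @property
    def sql(self) -> str:
        # NULLIF guards against division by zero in SQL.
        return f"({self.numerator.sql}) * 1.0 / NULLIF({self.denominator.sql}, 0)"


revenue = Measure("revenue", "SUM(amount)")
orders = Measure("order_count", "COUNT(DISTINCT order_id)")
avg_order_value = Ratio("avg_order_value", revenue, orders)

# A new version of revenue produces a new object; the old one is untouched.
revenue_v2 = replace(revenue, sql="SUM(amount - refund_amount)", version="2.0.0")
avg_order_value_v2 = replace(avg_order_value, numerator=revenue_v2, version="2.0.0")

print(avg_order_value.sql)
print(avg_order_value_v2.sql)
```

Because the objects are frozen, a changed definition is always a new, explicitly versioned object, which mirrors how a reviewed commit replaces an earlier definition.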

Be Intelligent and Serve Third-Party Semantic Models

Data can be messy, and semantic modeling should be intelligent, providing real-time guidance on modeling issues. Furthermore, the semantic layer should seamlessly integrate and serve other third-party semantic models, facilitating a unified hub for all semantic models within an organization for BI and analytics consumption.

In the modern data stack, dimensional data modeling stands as a core capability of the semantic layer. It provides a flexible, multi-dimensional modeling framework and welcomes all data personas. Additionally, it supports composable and versionable semantic objects and exhibits intelligence while accommodating third-party semantic models. This empowers organizations to harness the full potential of their data.

As businesses continue to rely on data and analytics for strategic decisions, mastering dimensional data modeling is essential to stay competitive in today’s data-driven landscape.

Benefits of Shifting to Dimensional Modeling Plus a Semantic Layer

By combining dimensional modeling and a semantic layer, you can transform how your company accesses, analyzes, and uses data. The combination improves both how your teams work with data and how your technology performs. Here’s how:

- Faster time to insight: Users can ask questions in terms they already know (e.g., customers, products, dates) without waiting for an analyst to write custom SQL or untangle complicated joins. Because dimensional models are optimized for analytical workloads rather than transactional ones, queries typically run faster as well.

- Consistent metrics: You define “revenue,” “churn rate,” or “customer lifetime value” once in the semantic layer, and it is calculated consistently across all tools and reports, such as Power BI, Tableau, Excel, Python, and AI reporting. Executive debates over which dashboard shows the correct number end when every tool pulls from the same governed definitions.

- True self-serve analytics: Dimensional models combined with a semantic layer allow business users to analyze data without knowing SQL or contacting your data team for every inquiry. Analytics leaders finally see the BI adoption they had hoped for.

- Future readiness: LLMs and AI agents require semantically rich and structured context to produce reliable answers. Dimensional models provide clean facts and dimensions, and the semantic layer adds the business metadata that describes what the data actually represents.

- Reduced technical debt: Data engineers no longer have to rebuild the same logic in five different places, because business logic resides in the semantic layer rather than being scattered across reports and pipelines. When business logic changes, you modify it in one location instead of searching through thousands of queries.

- Decoupling and scalability: By separating business logic from physical data storage in the semantic layer, you can reorganize your warehouse, migrate to a different cloud platform, or add new data sources without negatively impacting downstream reports.
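The decoupling benefit can be sketched as a logical metric definition rendered against interchangeable physical bindings. Everything here is hypothetical: the binding dictionaries, the table names such as `legacy_dw.fact_sales` and `lake.gold.fact_sales`, and the `render` helper exist only to illustrate the separation.

```python
# Logical definition: expressed in business terms, no physical names.
LOGICAL_METRIC = {"name": "revenue", "aggregate": "SUM", "column": "sales.amount"}

# Physical bindings for two storage layouts (hypothetical names).
OLD_WAREHOUSE = {"sales": "legacy_dw.fact_sales", "sales.amount": "amt"}
NEW_LAKEHOUSE = {"sales": "lake.gold.fact_sales", "sales.amount": "net_amount"}


def render(metric: dict, binding: dict) -> str:
    """Resolve a logical metric to SQL using one physical binding."""
    entity = metric["column"].split(".")[0]
    table = binding[entity]
    column = binding[metric["column"]]
    return f"SELECT {metric['aggregate']}({column}) AS {metric['name']} FROM {table}"


before = render(LOGICAL_METRIC, OLD_WAREHOUSE)
after = render(LOGICAL_METRIC, NEW_LAKEHOUSE)
print(before)
print(after)
```

The metric definition never changes during the migration; only the binding does, so downstream reports that ask for "revenue" keep working.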

The Drawbacks and How to Overcome Them

Shifting to dimensional modeling with a semantic layer solves many problems, but the transition itself brings real obstacles that organizations should anticipate. Modeling data directly within consumption tools compounds these challenges in predictable ways:

- It’s hard: Modeling at the consumption layer requires advanced SQL knowledge and a deep understanding of raw data structures, limiting who can actually generate insights from the data. Few data consumers have the expertise to work with raw, unnormalized schemas.

- Lack of clear business definitions: If you have an active debate in your company about what a “qualified lead” is, or what an “active customer” means, then ambiguity will exist regardless of how nice your dimensional model looks.

- Ungoverned semantic layers: Without version control, clear ownership, and change management processes for your semantic layer, it will become a source of confusion rather than truth.

- It wastes time: Data models built inside individual BI tools are not typically shared, forcing each analyst to reinvent the wheel for every new analysis instead of spending time on actual insight generation. Data consumers end up spending more time on data preparation than on analyzing data and generating insights.

- It creates inconsistency: Because different users define data models and calculations differently within each tool, terminology and query results diverge. That makes it nearly impossible to maintain consistent, trusted insights across the organization.

- Legacy technical debt: Years of accumulated reports, dashboards, and pipelines built on normalized schemas or ad hoc queries create dependencies that are difficult to unwind.

How to Overcome Them

- Work from the top down: Migrate your highest-priority use cases first rather than attempting a large-scale replatforming effort all at once. This lowers your risk of failure and lets you bank some early successes before taking on additional migrations.

- Establish governance early: Before or while you build your semantic layer, align your cross-functional teams on how metrics are defined. The data team needs to work with business stakeholders to agree on what conformed dimensions look like and how metrics should be calculated.

- Treat your semantic layer like production code: Just like any other piece of production code, implement peer review, testing environments, version control, and rollback capabilities to ensure that changes don’t cause problems for downstream dependencies. Establish clear ownership and change management processes to help avoid chaos as the system grows.

- Invest in organizational change: Dimensional modeling with a semantic layer is going to change the way modelers, engineers, analysts, and business users do their work. Create training, document patterns and best practices, and celebrate successes along the way to build momentum within each team.
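Treating semantic definitions like production code implies some automated checks. The following is a minimal sketch of a validation step that could run in a CI pipeline before a change is merged; the metric records, the required `owner` field, and the `KNOWN_TABLES` set are assumptions for illustration, not a standard format.

```python
KNOWN_TABLES = {"fact_sales", "dim_customer", "dim_date"}


def validate_metrics(metrics, known_tables):
    """Return a list of human-readable problems found in metric definitions."""
    errors = []
    seen = set()
    for metric in metrics:
        name = metric.get("name", "<unnamed>")
        if name in seen:
            errors.append(f"{name}: duplicate definition")
        seen.add(name)
        if not metric.get("owner"):
            errors.append(f"{name}: missing owner")
        if metric.get("table") not in known_tables:
            errors.append(f"{name}: unknown table {metric.get('table')!r}")
    return errors


# Hypothetical definitions, two of which should be rejected.
metrics = [
    {"name": "revenue", "owner": "finance", "table": "fact_sales"},
    {"name": "churn_rate", "owner": "", "table": "fact_subscriptions"},
    {"name": "revenue", "owner": "sales-ops", "table": "fact_sales"},
]

problems = validate_metrics(metrics, KNOWN_TABLES)
for problem in problems:
    print(problem)
```

A check like this catches competing definitions and orphaned metrics before they reach dashboards, which is cheaper than discovering them in an executive review.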

The Role of AI and Machine Learning in This Shift

To create a meaningful answer or insight, an LLM or other AI system needs to work with high-quality, structured, semantically rich data. Dimensional models create this foundation of facts organized around specific dimensions. AI agents can query that structure consistently instead of guessing at your business context and producing misleading or inaccurate statistics.

A semantic layer combined with your dimensional model gives generative AI and analytics agents the business metadata they need to find your company’s single definition of “revenue,” “customer churn,” and so on. With definitions that remain stable through training runs, and with AI-powered chat interfaces able to map natural language questions to the right queries, you get AI-ready data that is governed, explainable, and structurally usable for both human analysts and intelligent agents.
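As a rough sketch of what "business metadata for AI" can look like, the snippet below turns a small semantic model into plain-text context that could be placed in front of a user's question. No model is called here. The structure of `SEMANTIC_MODEL` and the metric descriptions are hypothetical examples, not your company's definitions or any vendor's format.

```python
# Hypothetical semantic model: names plus business descriptions.
SEMANTIC_MODEL = {
    "metrics": [
        {"name": "revenue", "description": "Sum of net invoiced amount."},
        {
            "name": "customer_churn",
            "description": "Share of last period's active customers who are inactive this period.",
        },
    ],
    "dimensions": [
        {"name": "month", "description": "Calendar month of the transaction."},
        {"name": "segment", "description": "Customer segment, such as Enterprise or SMB."},
    ],
}


def build_context(model: dict) -> str:
    """Render governed definitions as text an AI agent can be grounded on."""
    lines = ["Metrics:"]
    for metric in model["metrics"]:
        lines.append(f"- {metric['name']}: {metric['description']}")
    lines.append("Dimensions:")
    for dimension in model["dimensions"]:
        lines.append(f"- {dimension['name']}: {dimension['description']}")
    return "\n".join(lines)


context = build_context(SEMANTIC_MODEL)
print(context)
```

Supplying the agent with named, described metrics narrows what it has to infer, so a question about "churn" resolves to the governed definition rather than an improvised one.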

Key Takeaways

- Facts capture measurable events while dimensions provide context, creating structures that humans and AI systems can actually understand.

- Complex joins, inconsistent definitions, and slow query performance create delays, confusion, and endless metric debates.

- Define metrics once in the semantic layer, and those calculations work identically across every tool, dashboard, and AI application.

- Start with high-value use cases, establish governance early, and migrate incrementally rather than attempting an overnight transformation.

- LLMs and AI agents need well-structured, semantically rich data to generate accurate insights without hallucinating metrics.

- Business users get self-service analytics, engineers reduce technical debt, and executives finally get consistent metrics.

Enable Consistent, Governed Analytics with AtScale’s Semantic Layer

The AtScale semantic layer platform connects your dimensional models to cloud data warehouses and lakehouses, enabling consistent business metrics across all BI tools and AI applications without moving your data. Whether you’re modernizing legacy analytics or building out AI-ready infrastructure, AtScale will help you implement governance around your dimensional models that scales across your entire organization. Contact us to learn how AtScale enables this shift and receive an assessment of the current state of your analytics architecture.
