How do you move from experimental AI pilots to production systems you can trust? That was the central question Kyle Morton and I discussed on last month’s EDM Association webinar.

The data management community is keenly aware of what’s at stake with AI. But the answer to that question isn’t necessarily in the models themselves; it lies in the semantic foundation that makes AI deterministic. Organizations that neglect that foundation will continue to face unpredictable outputs, inconsistent results, and governance challenges. In that environment, AI is a liability, not an asset.

Here, I’ll walk through key themes from the session, explain why semantics are critical for enterprise AI, and outline where this tech is headed next.

The Trust Gap Without Semantics

The problem is hard to ignore: when you unleash a large language model on enterprise data without any semantic context, getting accurate results is nearly impossible. On top of that, users need to be aware of the probabilistic nature of LLMs, which can return different answers to the same question from one run to the next.

LLMs are being asked to understand custom schemas, navigate complex joins, identify the correct grain, and compute business metrics they’ve never seen before. They don’t come with an inherent understanding of your organization’s business logic. Without a semantic layer to provide that logic, they’re guessing. And those guesses have consequences.

Based on our testing, when a query requires joining more than four or five database tables, LLMs guess wrong 80% of the time. That’s an unacceptable failure rate for systems intended to support decision-making, automated agents, or customer-facing experiences. When you use AI without semantics, governance and risk are on the line.

Semantic Layers Are AI’s Deterministic Foundation

To address the trust gap, you need to eliminate ambiguity. Instead of asking an LLM to reverse-engineer business meaning from raw tables, the semantic layer exposes data in business terms that the LLM can understand.

For example, “gross margin” is not a calculation that AI can be expected to compute on its own. Business metrics are unique to each organization, and an LLM trained on the general Internet cannot be trusted to understand your business logic.

Instead, we can make the job easier for the LLM by offloading complex calculations and database schema navigation to the semantic layer, thereby improving accuracy to 92-100%. Besides improving accuracy, the semantic layer also makes business queries deterministic by ensuring that calculations and database joins are constructed and executed consistently for every query.
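To make the idea of offloading concrete, here is a minimal sketch in Python, assuming a toy star schema. The table and column names (`fact_sales`, `dim_product`, `sales_amount`, `cost_amount`) and the `compile_query` helper are hypothetical, not AtScale’s engine or API. It shows the two properties described above: gross margin is defined once in governed terms, and the same request always compiles to the same SQL, while names that aren’t defined are rejected rather than guessed.

```python
# Hypothetical metric store: each business metric is defined once, as SQL over physical columns.
METRICS = {
    "revenue": "SUM(f.sales_amount)",
    "gross_margin": "(SUM(f.sales_amount) - SUM(f.cost_amount)) / NULLIF(SUM(f.sales_amount), 0)",
}

# Hypothetical semantic model: business dimension -> (physical column, join it requires).
DIMENSIONS = {
    "product": ("p.product_name", "JOIN dim_product p ON f.product_key = p.product_key"),
    "country": ("g.country_name", "JOIN dim_geography g ON f.geo_key = g.geo_key"),
}


def compile_query(metrics, dimensions):
    """Turn business names into SQL. The same inputs always produce the same SQL text."""
    unknown = [name for name in metrics if name not in METRICS]
    unknown += [name for name in dimensions if name not in DIMENSIONS]
    if unknown:
        # Refuse rather than guess: only governed definitions are compiled.
        raise ValueError(f"not defined in the semantic layer: {unknown}")

    columns = [f"{DIMENSIONS[d][0]} AS {d}" for d in dimensions]
    columns += [f"{METRICS[m]} AS {m}" for m in metrics]
    # dict.fromkeys drops duplicate joins while keeping a stable order.
    joins = list(dict.fromkeys(DIMENSIONS[d][1] for d in dimensions))

    lines = ["SELECT " + ", ".join(columns), "FROM fact_sales f", *joins]
    if dimensions:
        lines.append("GROUP BY " + ", ".join(DIMENSIONS[d][0] for d in dimensions))
    return "\n".join(lines)


print(compile_query(["gross_margin"], ["product"]))
```

The LLM only has to pick `gross_margin` and `product`; the division, the `NULLIF` guard, and the join come from the definitions every time.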

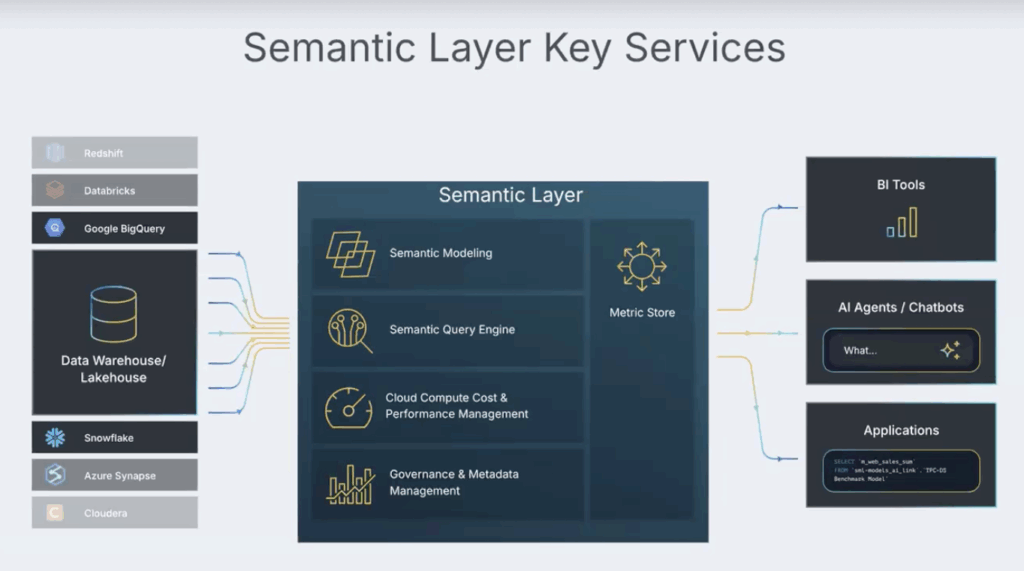

A universal semantic layer does this by delivering four key services:

- Metric Store – Defines business measures and dimensions in clear, governed terms.

- Semantic Model – Maps physical data structures into business concepts.

- Semantic Query Engine – Translates natural language or logical queries into accurate, repeatable physical database queries.

- Governance Control Plane – Applies role-based permissions, masking, and row-level filters consistently across all consumers.

These components ensure that every query, whether it’s a dashboard, an application, or an AI agent, follows the same rules. The semantic layer defines an organization’s business logic once and reuses it everywhere.
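As an illustration of the governance control plane, the sketch below applies one role’s row-level filter and column masking to a result set. The role names, columns, and the `apply_governance` function are hypothetical, and the filtering runs on in-memory rows for brevity; a production engine would more likely push these rules into the generated SQL. The point is that the policy lives in one place, so a dashboard, an application, and an AI agent querying as the same role get the same answer.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    # A row is visible only if it matches every column/value pair here.
    row_filter: dict = field(default_factory=dict)
    # Columns whose values are replaced with a mask for this role.
    masked_columns: set = field(default_factory=set)


# Hypothetical role policies, defined once in the control plane.
POLICIES = {
    "analyst_france": Policy(row_filter={"country": "France"}, masked_columns={"customer_email"}),
    "finance_admin": Policy(),
}


def apply_governance(role, rows):
    """Apply the role's row-level filter and column masking to a result set."""
    policy = POLICIES.get(role)
    if policy is None:
        # Default deny: a role without a policy sees nothing.
        raise PermissionError(f"no policy defined for role {role!r}")
    visible = []
    for row in rows:
        if all(row.get(col) == value for col, value in policy.row_filter.items()):
            visible.append(
                {col: ("****" if col in policy.masked_columns else val) for col, val in row.items()}
            )
    return visible


rows = [
    {"country": "France", "customer_email": "a@example.com", "sales": 120},
    {"country": "Germany", "customer_email": "b@example.com", "sales": 80},
]
print(apply_governance("analyst_france", rows))
```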

How Knowledge Graphs and Semantic Layers Work Together

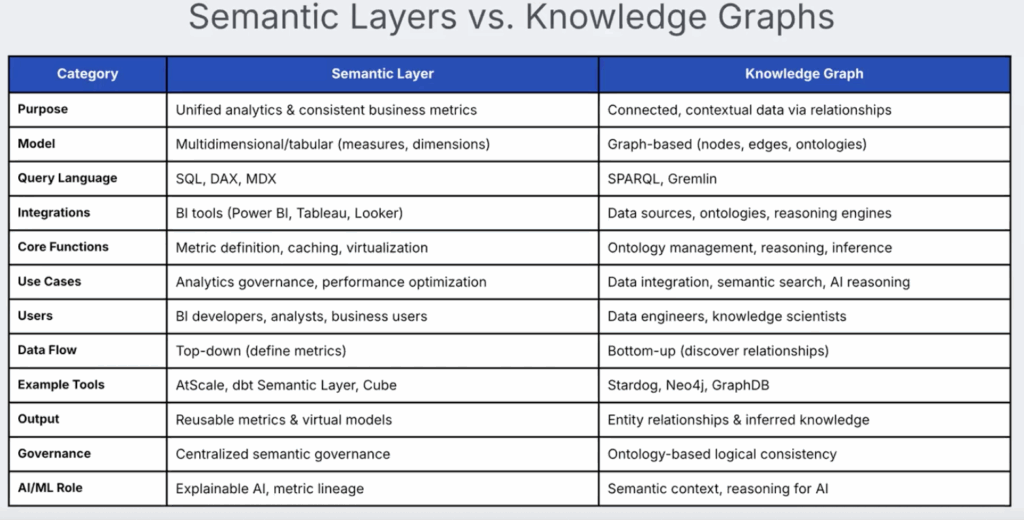

One question that came up during the discussion was how semantic layers differ from knowledge graphs. Each plays a distinct role as shown in the chart below.

Semantic layer platforms and knowledge graph tools complement each other. In fact, at AtScale, we use a knowledge graph in our query planner to help our query engine navigate relationships efficiently and predictably. In short, semantic layers directly answer business analytics questions, while knowledge graph tools map the relationships between entities and make those relationships easy to navigate and visualize.
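One common way to navigate relationships predictably is a breadth-first search over a graph of tables and their join conditions. The sketch below is a generic illustration of that technique, not AtScale’s query planner, and the schema is hypothetical. Sorting neighbors makes the chosen path independent of the order in which relationships happen to be listed.

```python
from collections import deque

# Hypothetical relationships: table -> {related table: join condition}.
RELATIONSHIPS = {
    "fact_sales": {
        "dim_product": "fact_sales.product_key = dim_product.product_key",
        "dim_customer": "fact_sales.customer_key = dim_customer.customer_key",
    },
    "dim_product": {"dim_category": "dim_product.category_key = dim_category.category_key"},
    "dim_customer": {"dim_geography": "dim_customer.geo_key = dim_geography.geo_key"},
}


def build_graph(relationships):
    """Make every relationship navigable in both directions."""
    graph = {}
    for left, neighbors in relationships.items():
        for right, condition in neighbors.items():
            graph.setdefault(left, {})[right] = condition
            graph.setdefault(right, {})[left] = condition
    return graph


def join_path(graph, start, goal):
    """Breadth-first search: return the shortest list of (table, join condition) hops."""
    if start == goal:
        return []
    previous = {start: None}
    queue = deque([start])
    while queue:
        table = queue.popleft()
        for neighbor in sorted(graph.get(table, {})):  # sorted -> same path on every run
            if neighbor in previous:
                continue
            previous[neighbor] = table
            if neighbor == goal:
                hops, node = [], goal
                while previous[node] is not None:
                    hops.append((node, graph[previous[node]][node]))
                    node = previous[node]
                return list(reversed(hops))
            queue.append(neighbor)
    raise ValueError(f"no relationship path from {start} to {goal}")


GRAPH = build_graph(RELATIONSHIPS)
for table, condition in join_path(GRAPH, "fact_sales", "dim_geography"):
    print(f"JOIN {table} ON {condition}")
```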

The Model Context Protocol (MCP) and AI Access to Data

Another key development in the AI space is MCP, a standard mechanism for AI systems to interact with external data platforms. MCP exposes the following concepts to an LLM:

- Tools: Executable actions (APIs, functions) that the LLM can invoke to do things.

- Resources: External data or objects the model can read for context.

- Prompts: Predefined templates/workflows that guide LLM behavior.

It’s a bit like how JDBC provided a common standard for database connectivity. AI needs the same standardization for connecting to enterprise data.
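Under the hood, MCP messages are JSON-RPC 2.0 requests. The sketch below only builds the payloads an AI client might send to discover and call a semantic layer’s capabilities; a real client first completes MCP’s `initialize` handshake and sends messages over a transport such as stdio or HTTP, both omitted here. The `run_semantic_query` tool name and its arguments are hypothetical; an actual server advertises its own tools and input schemas in its `tools/list` response.

```python
import json
from itertools import count

# Each JSON-RPC request carries an id so its response can be matched to it.
_request_ids = count(1)


def mcp_request(method, params=None):
    """Build a JSON-RPC 2.0 request for an MCP method (payload only, no transport)."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Discovery: which tools, resources, and prompts does the server expose?
discover = [mcp_request(m) for m in ("tools/list", "resources/list", "prompts/list")]

# Invocation: tool name and argument shape are hypothetical placeholders.
query = mcp_request(
    "tools/call",
    {
        "name": "run_semantic_query",
        "arguments": {"metrics": ["sales"], "dimensions": ["product"], "filters": {"country": "France"}},
    },
)
print(query)
```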

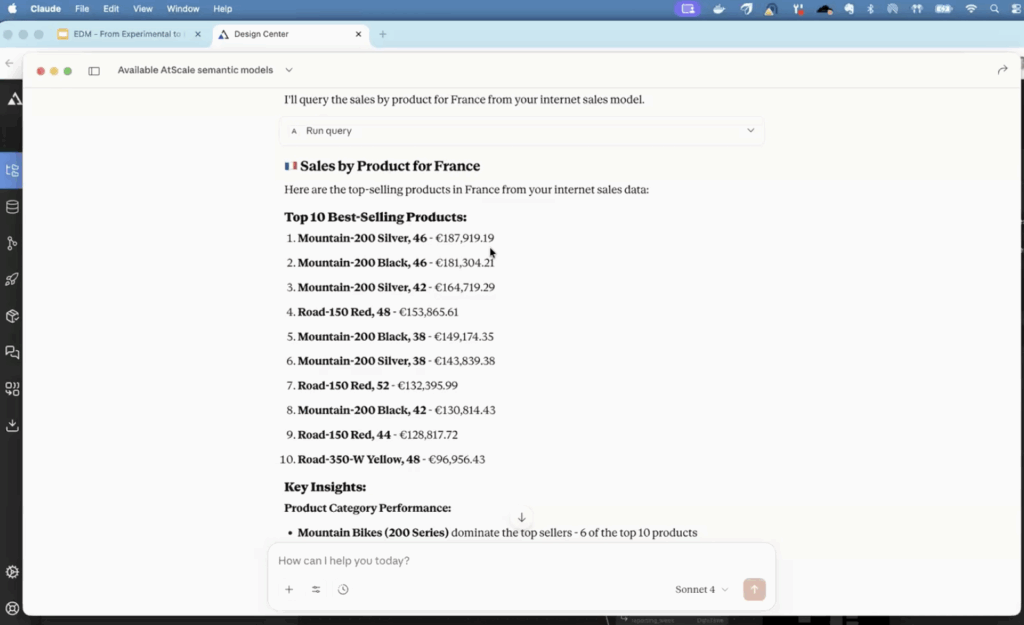

During the webinar, I showed how Claude uses AtScale’s MCP server to discover available semantic models, understand their structure, and run governed queries. No custom integration or proprietary interfaces needed.

When I asked, “Show me sales by product for France,” Claude didn’t attempt to infer how to join tables or identify currency conversions. It relied on the semantic layer to supply the correct SQL, governed result set, and business definitions.
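Here is a sketch of what relying on the semantic layer can look like from the model’s side, assuming the LLM expresses the request in business terms and those names are checked against the catalog it discovered. `CATALOG`, `LogicalQuery`, and `validate` are hypothetical names for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical catalog, as an LLM might see it after discovering a semantic model.
CATALOG = {
    "metrics": {"sales", "gross_margin"},
    "dimensions": {"product", "country", "order_date"},
}


@dataclass
class LogicalQuery:
    metrics: list
    dimensions: list = field(default_factory=list)
    filters: dict = field(default_factory=dict)


def validate(query, catalog=CATALOG):
    """Return a list of problems; an empty list means every name is governed."""
    problems = [f"unknown metric: {m}" for m in query.metrics if m not in catalog["metrics"]]
    problems += [f"unknown dimension: {d}" for d in query.dimensions if d not in catalog["dimensions"]]
    problems += [f"unknown filter: {f}" for f in query.filters if f not in catalog["dimensions"]]
    return problems


# "Show me sales by product for France", expressed in business terms only.
request = LogicalQuery(metrics=["sales"], dimensions=["product"], filters={"country": "France"})
print(validate(request))  # → []
```

Because the request names only governed metrics and dimensions, joins and currency logic stay inside the semantic layer, and an invented name is reported back instead of being guessed.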

But here’s where it gets interesting. When I prompted, “Tell me something about sales I don’t know,” Claude autonomously generated a series of queries, surfacing:

- High-value customer segments

- Geographic behavior patterns

- Correlations between product color and purchasing behavior

Generating these insights manually using a BI tool would take an analyst days or weeks. With a semantic layer and an LLM, AI can create queries and interpret their results autonomously to surface insights using the context of your business.

The Future: AI That Builds Semantic Models

We’re entering a new phase of data maturity. Soon, AI will not only query semantic models, but also create them.

Using AtScale’s open-source Semantic Modeling Language (SML), LLMs can generate semantic objects programmatically to automate semantic model building and drive consistent business definitions across the enterprise. Rather than hand-crafting semantic models, resource-strapped teams can leverage AI to propose and refine semantic definitions while maintaining strict governance and transparency.
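A sketch of the propose-then-govern loop: an LLM’s proposed metric passes deterministic checks before a person reviews it. The object format here (`name`, `type`, `label`, `expression`, `columns`) is a simplified hypothetical structure, not the actual SML schema, and `review_proposal` is an illustrative helper.

```python
# Hypothetical required fields for a proposed object (not the real SML schema).
REQUIRED_KEYS = {"name", "type", "label", "expression", "columns"}


def review_proposal(proposal, known_columns, existing_names):
    """Return problems with an AI-proposed metric; an empty list means it can go to human review."""
    missing = REQUIRED_KEYS - set(proposal)
    if missing:
        return [f"missing keys: {sorted(missing)}"]
    problems = []
    if proposal["type"] != "metric":
        problems.append(f"unsupported type: {proposal['type']}")
    if proposal["name"] in existing_names:
        problems.append(f"name already governed: {proposal['name']}")
    unknown = sorted(set(proposal["columns"]) - set(known_columns))
    if unknown:
        problems.append(f"unknown columns: {unknown}")
    return problems


proposal = {
    "name": "gross_margin",
    "type": "metric",
    "label": "Gross Margin",
    "expression": "(SUM(sales_amount) - SUM(cost_amount)) / SUM(sales_amount)",
    "columns": ["sales_amount", "cost_amount"],
}
print(review_proposal(proposal, {"sales_amount", "cost_amount"}, {"revenue"}))  # → []
```

The model does the drafting; acceptance still depends on rules that behave the same way every time, plus human sign-off.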

How to Get Started

One participant asked: “How do I start this conversation if I don’t have decision-making authority?”

The answer’s the same as it was 20 years ago: Start with the business need. There’s a lot of hype around AI, but AI alone isn’t the entry point. It’s about:

- Trusted data and analytical results

- Consistent business definitions

- Bulletproof governance and guardrails

The data management community has always understood that trust starts with a good foundation. The semantic layer is the foundation for the AI era.

Thanks to Kyle and the EDM Association for having me. You can watch the full webinar here and explore how semantic layers make AI trustworthy for your organization.
